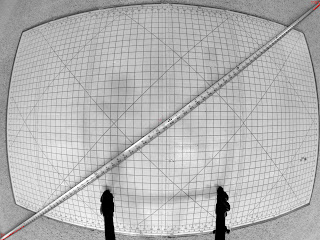

GoPro Hero3 Black Multi-Camera Timing

In the previous post, I explored the field of view of a GoPro Hero3 Black camera. In this post, I look at the timing of two cameras, both paired with the same remote. I set both cameras to 848x480 video at 240 Hz, pointed them at a kitchen timer whose LED blinks once per second, and recorded.

The composite image shows four frames from each camera, lined up so you can see how the timing varies.

So, the two cameras agree to within 2 frames at 240 Hz, a difference of only about 8 milliseconds. That should be good enough for my purposes.

The bigger concern is the color shift between them: the bottom image looks a little more saturated than the top. Probably correctable, but annoying.
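For the timing figure, the conversion from a frame offset to milliseconds is simple arithmetic. Here is a minimal sketch of it; the function name `frame_offset_to_ms` is my own for illustration, and the only inputs are the 2-frame offset and 240 Hz frame rate from the test above.

```python
def frame_offset_to_ms(frames: float, fps: float) -> float:
    """Convert an offset measured in video frames to milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    # One frame lasts 1/fps seconds; multiply by 1000 for milliseconds.
    return frames / fps * 1000.0


# Worst-case offset observed between the two cameras: 2 frames at 240 Hz.
offset_ms = frame_offset_to_ms(2, 240)
print(f"{offset_ms:.2f} ms")  # prints "8.33 ms"
```

The same function gives the duration of a single frame (about 4.17 ms at 240 Hz), which is the finest timing difference this kind of test can resolve.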